Kentucky sues Character.AI, alleging it harms children and violates data law

Kentucky has filed a lawsuit against chatbot company Character.AI and its founders for allegedly violating child safety and data protection laws.

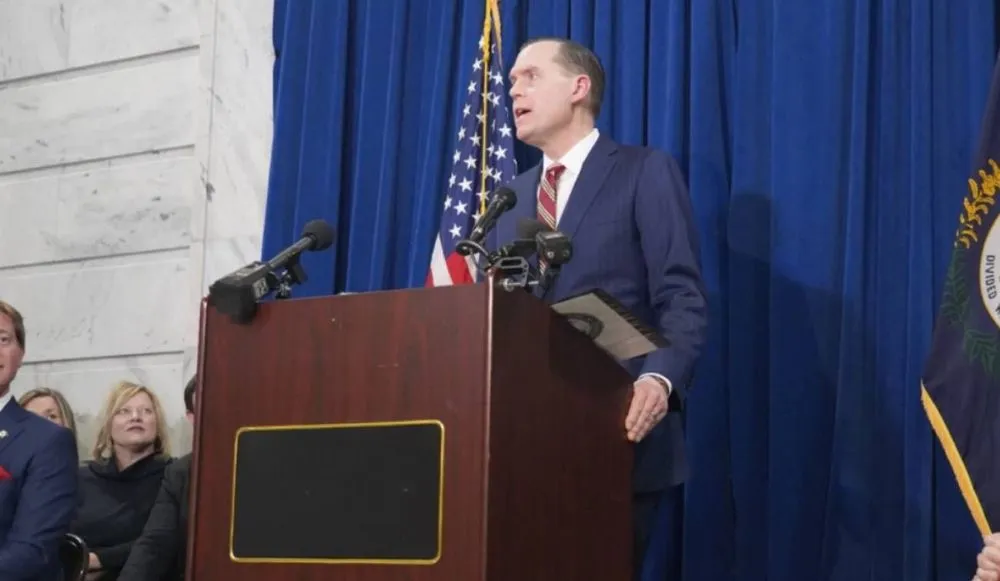

Attorney General Russell Coleman is seeking civil penalties and a court order barring Character.AI from continuing to deploy what the complaint called “dangerous technology that induces users into divulging their most private thoughts and emotions and manipulates them with too frequently dangerous interactions and advice.”

Character.AI has more than 20 million monthly users. The platform lets people create their own bots or interact with ones created by others, and it is known for offering human-like artificial intelligence chats, including through chatbots modeled after fictional characters popular with children.

The company and its two founders are accused of violating the Kentucky Consumer Data Protection Act, which came into force on January 1. The law offers heightened protections around personal data gathered from children under age 13, including by requiring parental consent for its collection.

Character.AI and its founders “concealed material facts regarding their collection and use of children’s data and failed to disclose that such data was used to improve the underlying LLM and generate subscription-based revenue,” according to the complaint.

The platform does not effectively age-gate users, offer parental controls, appropriately filter content or obtain consent from the parents of children, the complaint said. As a result, young children were “induced to disclose personal and sensitive information — including names, ages, preferences, and emotional or health-related disclosures — through extended conversations with chatbots designed by defendants.”

A spokesperson for Character.AI said in a statement that the company is reviewing the allegations.

“Our highest priority is the safety and well-being of our users, including younger audiences,” the statement said. “We have invested significantly in developing robust safety features for our under-18 experience, including going much further than the law requires to proactively remove the ability for users under 18 in the U.S. to engage in open-ended chats with AI on our platform.”

The spokesperson said the company has been speaking with the attorney general’s office for months and is “disappointed that they have chosen to pursue litigation rather than continuing our collaborative dialogue.”

Inadequate reforms

The company’s policy barring minors from open-ended chats, which became effective in November, does not offer adequate protections, the complaint alleged.

Children can easily work around the restriction on open-ended chats by entering a fake birthday, the complaint said, and Character.AI does not adequately regulate other interactions either. Those include Stories, a new feature allowing minors to “select and set up scenarios between AI bots which depicts stories of explicit and romantic relationships and violence.”

Some Character.AI chatbots are popular children’s fictional characters from Sesame Street, Paw Patrol, Bluey and Disney movies. Despite their kid-friendly exteriors, the complaint said, the chatbots in some cases engage in sexual conversations and “trivialize substance abuse, self-harm, aggression, and violence.”

Character.AI has been accused of contributing to at least two teenagers’ suicides, with lawsuits alleging children took their lives after extensive dialogues with Character.AI chatbots.

“Character.AI’s design fails to keep children safe,” the Kentucky complaint said. “It denies parents and guardians the ability to safeguard their children because it offers a faux interpersonal interaction that preys upon children’s inability to distinguish between real and artificial ‘friends.’”

According to the attorney general, company founders Noam Shazeer and Daniel De Freitas Adiwarsana, who previously worked at Google, left the tech giant because the technology they developed there — which directly informed the creation of Character.AI — was deemed “too dangerous” for release.

“It’s like, let’s try this thing, and you know see what happens,” the complaint alleged Shazeer said on a 2023 tech podcast. “I think that’s the most fun part… throw something out there and let people use it however they want.”

Suzanne Smalley is a reporter covering digital privacy, surveillance technologies and cybersecurity policy for The Record. She was previously a cybersecurity reporter at CyberScoop. Earlier in her career Suzanne covered the Boston Police Department for the Boston Globe and two presidential campaign cycles for Newsweek. She lives in Washington with her husband and three children.