ChatGPT Health feature draws concern from privacy critics over sensitive medical data

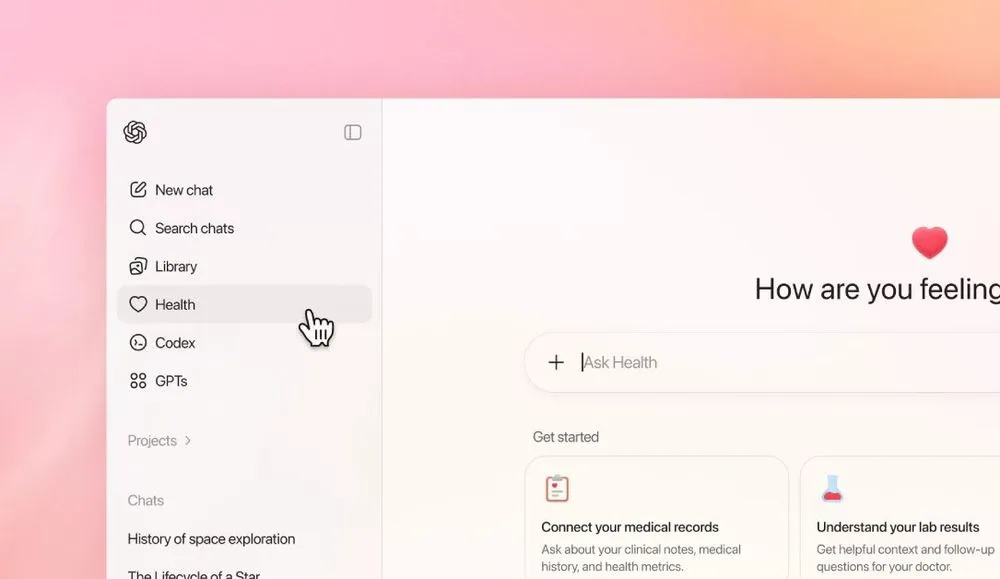

OpenAI’s announcement Wednesday of a new ChatGPT Health program emphasized how the company will safeguard users’ sensitive medical information, highlighting what experts say will be one of the next big battlegrounds in data privacy.

In a blog post, the artificial intelligence juggernaut encouraged its hundreds of millions of users to connect their medical records and wellness app data to the new health-focused chatbot feature, saying it will be outfitted with extra data privacy protections.

“ChatGPT Health builds on the strong privacy, security, and data controls across ChatGPT with additional, layered protections designed specifically for health — including purpose-built encryption and isolation to keep health conversations protected and compartmentalized,” the blog post said.

Information shared with ChatGPT Health also will not be used to train “foundational models,” according to the blog post.

More than 230 million people worldwide already ask ChatGPT health and wellness questions each week, the blog post said, underscoring what the company says is strong consumer demand for a dedicated health product.

But health information is uniquely sensitive, advocates said, and data shared with ChatGPT Health will not be protected by the Health Insurance Portability and Accountability Act (HIPAA), a landmark health data privacy law.

Individuals sharing their electronic medical records with ChatGPT Health “would remove the HIPAA protection from those records, which is dangerous,” said Sara Geoghegan, senior counsel at the Electronic Privacy Information Center.

The U.S. has no comprehensive privacy law, which creates real risks for people sharing health data with OpenAI, Geoghegan said, because the company can ultimately do what it wants with the sensitive health data it collects.

“ChatGPT is only bound by its own disclosures and promises, so without any meaningful limitation on that, like regulation or a law, ChatGPT can change the terms of its service at any time,” Geoghegan said.

The issue has come into focus in recent months because of the 23andMe bankruptcy. Many users who had shared their highly sensitive genetic data with 23andMe were alarmed to learn that the data was being offered to the highest bidder as part of a bankruptcy sale.

OpenAI’s business model is also evolving in ways that could give it an incentive to leverage the health data it receives to drive profits, advocates say.

“While OpenAI says that it won’t use information shared with ChatGPT Health in other chats, AI companies are leaning hard into personalization as a value proposition,” said Andrew Crawford, senior counsel at the Center for Democracy and Technology.

“Especially as OpenAI moves to explore advertising as a business model, it’s crucial that separation between this sort of health data and memories that ChatGPT captures from other conversations is airtight.”

Ultimately, OpenAI’s announcement about ChatGPT Health leaves many vital health data privacy questions unanswered, advocates say.

It is especially critical for consumers sharing reproductive health information with the chatbot to proceed with caution, Crawford said, because OpenAI has said nothing about the processes it will use to determine when to share health data with law enforcement.

“How does OpenAI handle [law enforcement] requests?” Crawford asked. “Do they just turn over the information? Is the user in any way informed? There’s lots of questions there that I still don’t have great answers to.”

In addition to electronic health records, ChatGPT Health will be able to draw on data collected from numerous wellness apps, the blog post said.

Apple Health, MyFitnessPal and Weight Watchers are among the health apps that can be connected to the chatbot. OpenAI is partnering with b.well, which it said is the biggest and most secure network of “live, connected health data” for consumers, to power the feature.

Suzanne Smalley is a reporter covering digital privacy, surveillance technologies and cybersecurity policy for The Record. She was previously a cybersecurity reporter at CyberScoop. Earlier in her career, Suzanne covered the Boston Police Department for the Boston Globe and two presidential campaign cycles for Newsweek. She lives in Washington with her husband and three children.