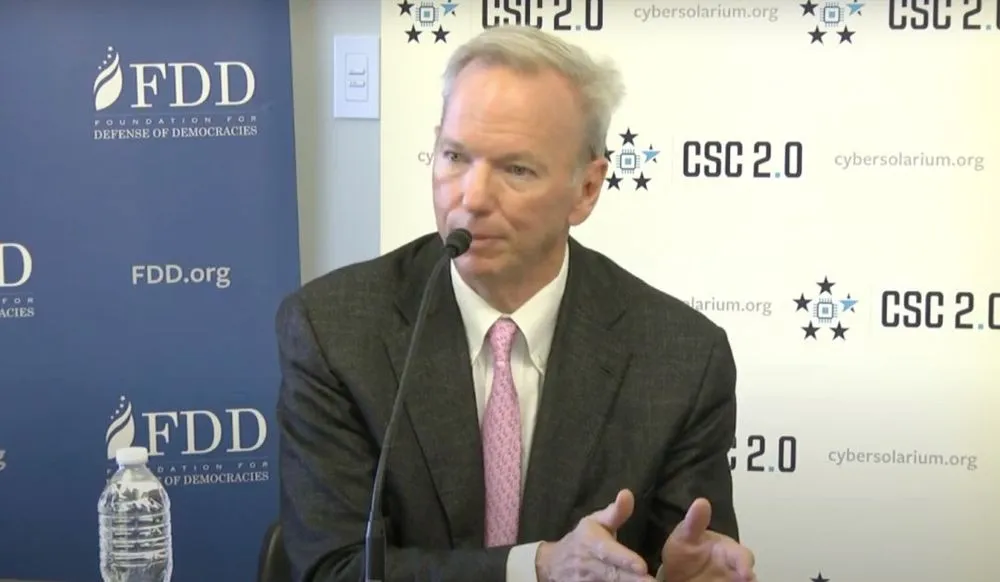

US needs an AI czar to help regulate risky technology, ex-Google CEO Eric Schmidt says

The former CEO of Google and chairman of a congressionally chartered commission studying the implications of artificial intelligence for national security said Wednesday that he believes most governments are “screwing it up” when it comes to regulating the powerful technology.

Eric Schmidt, appearing at a panel discussion alongside Cyberspace Solarium Commissioner Rep. Mike Gallagher (R-WI), said he is focused on preventing AI from causing vast or existential harm.

“There are scenarios in the future where AI as it evolves could be such a danger — it’s not today,” Schmidt said, adding that too often governments focus on the wrong AI threats. “I would suggest that we not try to regulate the stuff that we all complain about until we have a more clear target.”

Schmidt condemned the European Union’s pending AI Act, which he said is so restrictive that it will prevent the use of open source software in any form for AI. He said the U.S. should try to “define an actual problem and then try to work on how to solve that problem.”

He recommended the U.S. create the equivalent of an AI Czar.

“If you want something to happen at scale there needs to be a concerted owner,” he said.

Schmidt said the U.S. government’s approach to confronting AI’s national security implications has been dysfunctional, with the military being risk averse and often at odds with Congress on funding priorities.

He recalled interviewing a senior military leader about the problem as part of his commission work.

“I said, ‘If you’re such a big cheese why can’t you get 50 people to work on the software problems that we have identified?’” Schmidt said. “And he said, ‘I tried.’ And I said, ‘Well, what happened?’ And he said, ‘They were appropriated away from me.’”

Gallagher said there is no easy solution to the problem. He said he doubts whether traditional international arms control frameworks are useful tools for AI governance, calling it essential to first examine the AI guardrails the Defense Department has in place and whether they can be shored up and extended across the U.S. government.

The former Google CEO also repeatedly cited the threat posed by AI-driven misinformation.

“I’m calling the alarm, I’m saying right now that it’s going to happen, get our act together,” Schmidt said.

Schmidt also said there is a looming privacy threat embedded in AI. He said that since the training of ChatGPT and other AI models is now fairly static, he is not worried about the current state of affairs. But he said that threat will change.

“When these systems — which is not today — don’t hallucinate, can learn continuously and have really good memory which they don’t have today,” Schmidt said, AI will also pose a significant threat to individual privacy.

Suzanne Smalley is a reporter covering digital privacy, surveillance technologies and cybersecurity policy for The Record. She was previously a cybersecurity reporter at CyberScoop. Earlier in her career Suzanne covered the Boston Police Department for the Boston Globe and two presidential campaign cycles for Newsweek. She lives in Washington with her husband and three children.