Deepfake news anchors spread Chinese propaganda on social media

In a series of videos posted on Twitter, Facebook and YouTube, Chinese state-aligned actors used AI-generated broadcasters to distribute content that promotes the interests of the Chinese Communist Party, according to a new report.

At first glance, the news presenters of the likely fictitious media company Wolf News look like real people, and researchers with the social media analytics firm Graphika initially thought they were paid actors.

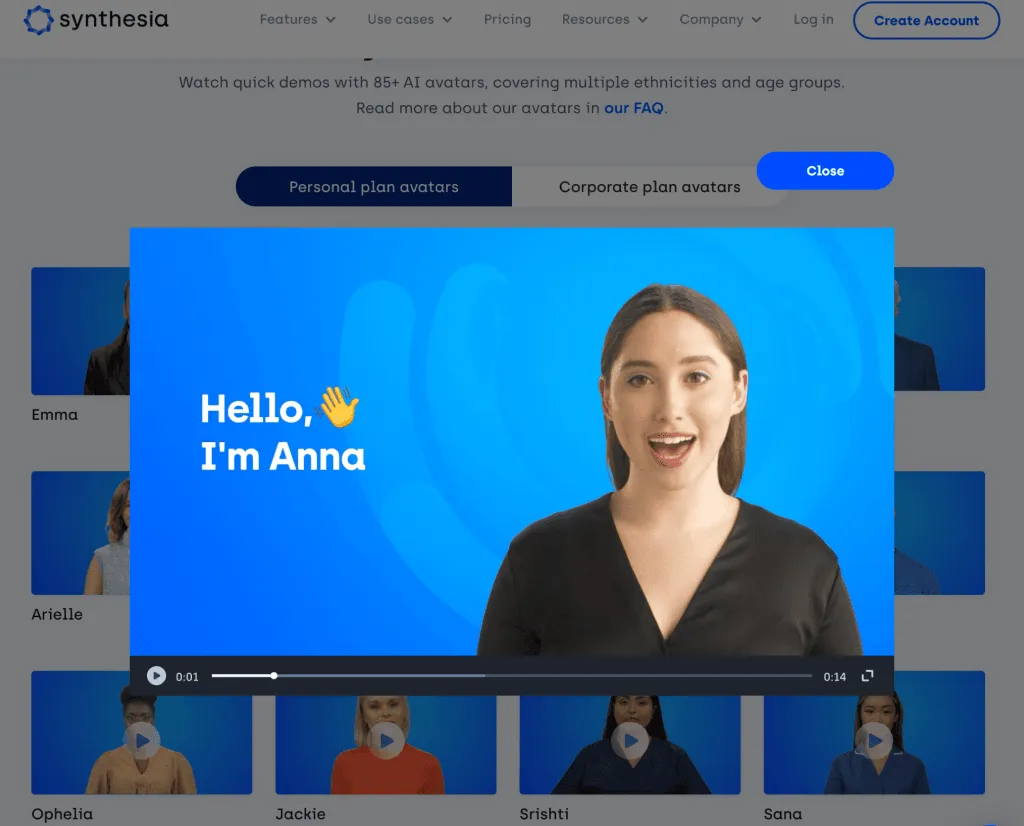

But further investigation revealed the Wolf News presenters were “almost certainly” created using technology provided by a British AI video company called Synthesia, which recently confirmed that its technology was used to create AI-generated videos promoting pro-military propaganda in Burkina Faso.

The fake news anchors used in the Chinese campaign are featured on Synthesia's website — the female avatar is listed as “Anna” and the male avatar is named “Jason.” They have also been used in other promotional videos unrelated to China. Synthesia did not respond to a request for comment about the report, and its website says it will not offer its software for public use and will review all content before its “trusted customers” publish it.

In the new campaign, the avatars mostly promoted the interests of the Chinese Communist Party — one video accused the U.S. government of attempting to tackle gun violence through “hypocritical repetition of empty rhetoric.” Another stressed the importance of China-U.S. cooperation for the recovery of the global economy. Both videos used the branding of what researchers said is likely a fake media company — a gray-and-white logo with a silhouette of a wolf.

Graphika, which has investigated similar pro-Chinese political spam operations since 2019, said the newly discovered fake videos have many characteristics similar to older ones: they are under three minutes long, use a compilation of stock images and news footage from online sources, and are accompanied by robotic English-language voiceovers.

Despite the effort put into it, the influence operation likely had limited impact: the AI-generated videos were low-quality and received fewer than 300 views each, Graphika said.

But the so-called ‘spamouflage’ campaign raises concerns about the abuse of commercially available AI products to create deceptive content at greater scale and speed. Synthesia, for example, can create AI-generated videos in minutes for as little as $30 per month. Journalists, researchers, and government officials have warned that countries including Russia and China are already leveraging similar technology in their operations.

In the latest campaign, Chinese actors used commercial services rather than their own products, suggesting that they are more likely to use tools most readily available to them, Graphika said. Still, researchers predict that threat actors will continue to experiment with AI tech, producing increasingly convincing footage that is harder to detect and verify.

Daryna Antoniuk is a reporter for Recorded Future News based in Ukraine. She writes about cybersecurity startups, cyberattacks in Eastern Europe and the state of the cyberwar between Ukraine and Russia. She previously was a tech reporter for Forbes Ukraine. Her work has also been published at Sifted, The Kyiv Independent and The Kyiv Post.